Prompt engineering taught us how to communicate with models. It didn’t teach models how to reason within the constraints of real systems. As enterprises move from copilots to autonomous agents, the challenge is no longer linguistic - it’s architectural. The question isn’t how we ask, but what environment the model reasons within: one defined by data structure, system state, and governance. Context has become that environment - the operational substrate that anchors reasoning to reality. It’s the foundation that makes agentic systems safe, reproducible, and aligned with how organizations work.

In the early days of building agentic systems, we focused on the obvious challenges - reasoning, planning, and orchestration. But as we scaled from single-use copilots to production-grade autonomous agents, one theme kept resurfacing across every experiment, every failure mode, and every postmortem: The hardest problem in scaling agents isn’t reasoning. It’s context. When agents operate in complex, interdependent enterprise environments, intelligence without context is unsafe.

Large language models are extraordinary at pattern completion. But they are also stateless. Each interaction is a probabilistic snapshot, disconnected from the history, structure, and governance of the enterprise.

In a demo environment, this is fine. In a real company - where a workflow touches finance, compliance, and customer systems - it’s a liability. Enterprise AI systems don’t just need accuracy; they need alignment with how the organization actually works. That requires persistent, structured context.

Most large language models are stateless. Each inference is a self-contained event - a probabilistic reasoning pass that forgets what came before. In a sandbox, that’s fine. But in a live enterprise workflow, where actions span finance, compliance, and customer systems, it’s a liability.

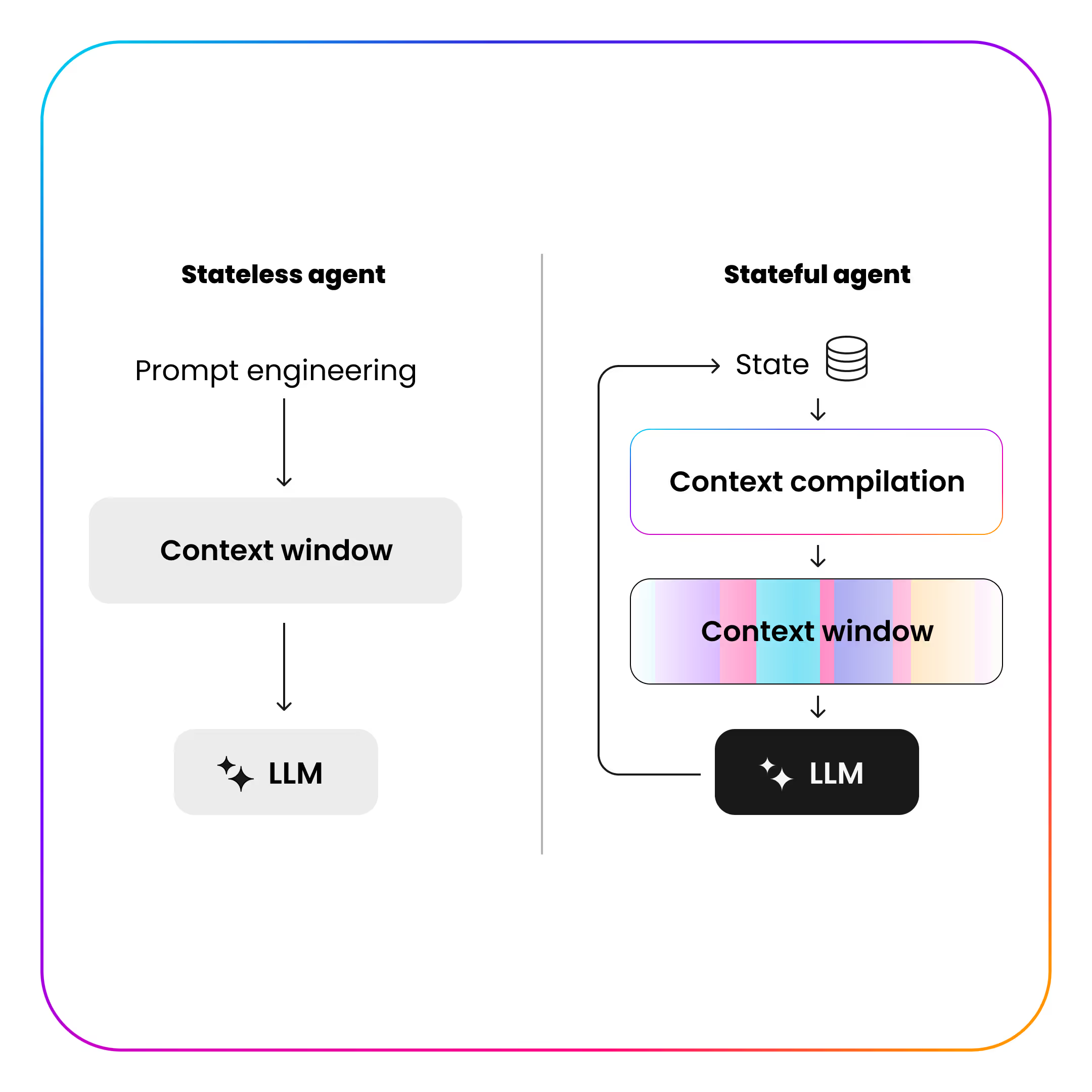

A stateless agent can’t recall what policy it applied yesterday, what exception was approved last week, or which record it already modified. Every decision starts from zero, forcing engineers to reload background data, reapply rules, and revalidate logic at every step.

A stateful agent, by contrast, carries persistent context - a structured memory of what’s happened, under what governance, and within which system state. It doesn’t “remember” text; it maintains structured meaning: entities, roles, permissions, and temporal dependencies.
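To make the idea of structured memory concrete, here is a minimal Python sketch of what such a context record might look like. The names `ContextEvent`, `AgentContext`, and their fields are hypothetical and chosen only for illustration; they are not taken from any specific product or library.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class ContextEvent:
    """One thing that happened, under which policy, and when (hypothetical shape)."""
    entity_id: str   # the record the agent touched, e.g. an invoice
    action: str      # what the agent did to it
    policy_id: str   # the governance rule applied at the time
    at: datetime     # when it happened, for temporal dependencies


@dataclass
class AgentContext:
    """Illustrative structured memory: a role, its permissions, and a history."""
    role: str
    permissions: frozenset
    events: list = field(default_factory=list)

    def record(self, entity_id: str, action: str, policy_id: str,
               at: Optional[datetime] = None) -> None:
        # Refuse to log (and therefore to perform) actions the role may not take.
        if action not in self.permissions:
            raise PermissionError(f"{self.role} may not {action}")
        when = at or datetime.now(timezone.utc)
        self.events.append(ContextEvent(entity_id, action, policy_id, when))

    def already_modified(self, entity_id: str) -> bool:
        # Answers "which record did I already modify?" without re-deriving it.
        return any(e.entity_id == entity_id and e.action == "modify"
                   for e in self.events)

    def last_policy_for(self, entity_id: str) -> Optional[str]:
        # Answers "what policy did I apply last time?" for a given entity.
        matches = [e for e in self.events if e.entity_id == entity_id]
        return max(matches, key=lambda e: e.at).policy_id if matches else None
```

An agent holding an `AgentContext` can check `already_modified("INV-1")` before acting again on the same record, instead of starting every decision from zero.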

This shift - from stateless reasoning to stateful systems - is a key factor in agentic AI success. The model remains probabilistic, but the system becomes deterministic. Context, not prompts, is what carries intelligence forward safely.

Context is everything that shapes a decision but isn’t written into the prompt:

Together, these form the invisible architecture that gives AI actions continuity and meaning. Without it, an agent is just a reasoning engine without grounding - clever in isolation, but disconnected from the world it’s supposed to operate in.

When an agent forgets what it already knew, the system pays a cost. Each time a model invocation runs without prior state, it must reload background, reconnect to data sources, and revalidate assumptions from scratch. This constant rehydration consumes more compute, time, and energy. Over hundreds or thousands of runs, the expense compounds - both monetarily and operationally. Without persistent context, every interaction becomes more resource-intensive and harder to manage at scale, turning context loss into a structural inefficiency rather than just a technical inconvenience.

It shows up in familiar ways:

Teams often try to patch around these gaps with retrieval layers, local caches, or memory databases. These work in isolation but don’t scale - they duplicate logic, drift from policy, and fail under governance requirements.

Without an explicit, persistent model of context, you end up with brittle point solutions that can’t be trusted to act safely or consistently. To make agentic systems reliable, context has to be treated as a first-class system primitive - managed, versioned, and shared across every agent and workflow.
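One way to picture "managed, versioned, and shared" is a small append-only store that every agent reads from and commits to. The sketch below is an in-memory toy under that assumption; `ContextStore` and its methods are hypothetical names, and a real system would also need persistence, access control, and concurrency handling.

```python
import copy
from typing import Optional


class ContextStore:
    """Toy append-only, versioned context shared by several agents."""

    def __init__(self) -> None:
        # Version 0 is the empty context; each commit appends a new snapshot.
        self._versions = [{}]

    @property
    def version(self) -> int:
        return len(self._versions) - 1

    def commit(self, changes: dict) -> int:
        # Build the next snapshot from the latest one; never mutate history.
        snapshot = copy.deepcopy(self._versions[-1])
        snapshot.update(copy.deepcopy(changes))
        self._versions.append(snapshot)
        return self.version

    def read(self, version: Optional[int] = None) -> dict:
        # Readers get a copy, so they cannot alter shared state by accident.
        idx = self.version if version is None else version
        if not 0 <= idx <= self.version:
            raise KeyError(f"unknown context version {idx}")
        return copy.deepcopy(self._versions[idx])
```

Because every decision can cite the version it read, an earlier decision can be replayed later against exactly the context that was in force when it was made.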

For most of the past two years, the conversation around improving AI performance has centered on prompt engineering - finding the right phrasing, format, or instruction style to coax a model into better behavior. It was a useful phase of learning, but it had clear limits.

Prompt engineering treats intelligence as something that can be coached through language. It’s about linguistic clarity - asking questions more precisely, stacking few-shot examples, or chaining prompts for multi-step reasoning. But prompts don’t give models grounding. They don’t carry forward meaning, data relationships, or policy. They simply restate intent each time. In small, single-turn tasks, that’s enough. In enterprises, where systems, roles, and decisions are interdependent, it isn’t.

Context engineering represents a deeper, structural shift. It’s about designing the environment in which reasoning happens, not just the words that trigger it.

Where prompt engineering optimizes for output phrasing, context engineering optimizes for semantic integrity - ensuring that every AI decision reflects the organization’s true data, logic, and governance.

You can think of it as moving from prompt craftsmanship to systems architecture.

Context engineering introduces a new set of design primitives - all borrowed from software and data engineering, not linguistics.

When these pieces come together, context becomes a structured substrate for reasoning - one that’s reusable, traceable, and aligned with real-world constraints.

Each retrieval or memory layer solves only a local problem - improving recall for one agent, or preserving short-term memory within a single workflow. But these components don’t share state, semantics, or governance globally. Without a unifying layer, enterprises end up with context silos: isolated memories that drift, duplicate logic, and lose compliance visibility.

It’s the same pattern we saw in early data systems - when every team built its own pipeline until data warehouses unified the model. Context is that missing unifying layer for reasoning systems: the foundation that connects agents, data, and governance under one consistent state model.

Most current implementations stop short of true context engineering:

When context is engineered properly, agents no longer need to guess structure or infer rules from patterns. They can reason over explicit meaning - the same definitions, hierarchies, and guardrails humans rely on.

That means:

In practice, this is what turns an AI agent from a conversational interface into a reliable system component - one that can make and justify decisions within the same semantic and policy framework as the enterprise itself.
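As a rough illustration of making and justifying decisions inside an explicit policy framework, the sketch below checks a model-proposed action against context with plain deterministic code. The dictionary layout (`permissions`, `limits`) and the names `Decision` and `evaluate` are assumptions made for this example, not an established schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    allowed: bool
    reason: str  # human-readable justification that can be logged or audited


def evaluate(action: dict, context: dict) -> Decision:
    """Deterministically check a proposed action against explicit context."""
    role = action.get("role")
    kind = action.get("kind")
    amount = action.get("amount", 0)

    # Rule 1: the role must be explicitly permitted to take this kind of action.
    allowed_kinds = context.get("permissions", {}).get(role, set())
    if kind not in allowed_kinds:
        return Decision(False, f"role '{role}' is not permitted to '{kind}'")

    # Rule 2: if a limit is defined for this kind of action, it must be respected.
    limit = context.get("limits", {}).get(kind)
    if limit is not None and amount > limit:
        return Decision(False, f"amount {amount} exceeds {kind} limit {limit}")

    return Decision(True, f"'{kind}' within policy for role '{role}'")
```

The model may propose different actions from run to run, but the same action evaluated against the same context always yields the same `Decision` and the same stated reason.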

Most of the industry still treats “context” as a model problem - something to fix by feeding the model better inputs. If the model hallucinates, we add retrieval. If it forgets, we add memory. If it reasons poorly, we fine-tune. But these approaches assume the model is the system. In the enterprise, it isn’t.

Agents don’t fail because the model can’t read - they fail because the infrastructure around the model doesn’t preserve meaning, state, or governance. Scaling context isn’t about larger context windows or better embeddings; it’s about system design:

These are engineering problems, not linguistic ones. They belong to the same domain as distributed systems, data lineage, and state synchronization - not to prompt crafting.

The truth is, context infrastructure determines long-term reliability. A model can generate answers; only infrastructure can guarantee that those answers remain consistent, compliant, and explainable. That’s why the next generation of enterprise AI platforms won’t compete on model size - they’ll compete on how well they manage and serve context.

At Unframe, we created the Knowledge Fabric because every enterprise we worked with was rebuilding the same context layer - again and again. Each team tried to synchronize data models, enforce governance, and align semantics across its own agents. Each solved a small piece of the problem, but none could solve it in its entirety.

So we approached context as a common layer that extracts meaningful relationships across data and automates everything agents need to reason safely. The Knowledge Fabric sits between enterprise data systems and AI execution layers. It’s a context control plane that maintains structure, lineage, and policy across all AI workflows.

Here’s what it does in engineering terms:

This architecture transforms context from an unstructured prompt artifact into a managed state layer - versioned, queryable, and continuously aligned.

It’s how enterprises finally achieve deterministic behavior from probabilistic systems: not by controlling the model itself, but by controlling its environment. When context becomes infrastructure, agents inherit understanding safely, and reasoning becomes reproducible by default.

The story doesn’t end with building context - it begins there. Once context becomes interoperable, enterprises won’t build single agents; they’ll orchestrate networks of agents that share reasoning, governance, and state through the same contextual substrate.

Every agent becomes a node in a distributed reasoning fabric - collaborating through shared semantics rather than static APIs. Context feedback loops keep this system self-correcting: each decision updates the contextual graph, and every clarification refines governance alignment.

Three shifts are already visible on the horizon:

Enterprises that master context will move faster and safer than those still tuning prompts. They’ll build systems that don’t just automate decisions, but understand the organizational reasoning behind them.

Because the next phase of enterprise AI isn’t about bigger models - it’s about context layers that continuously learn, govern, and align. That’s where the real compounding returns begin.