Great momentum in 2025 led to breakthrough platform updates that are changing how enterprises run AI in production.

Over the past year, we focused on a practical problem: deploying AI inside real operational systems.

AI solutions running in production operate under uncertainty, scale, and governance constraints - and they are expected to behave predictably over time. The platform capabilities we shipped in 2025 reflect this reality. We invested in controls for uncertainty, execution, observability, and governance, so AI can run safely inside mission-critical workflows.

Below are our top seven platform updates from 2025, and what they unlocked for our customers.

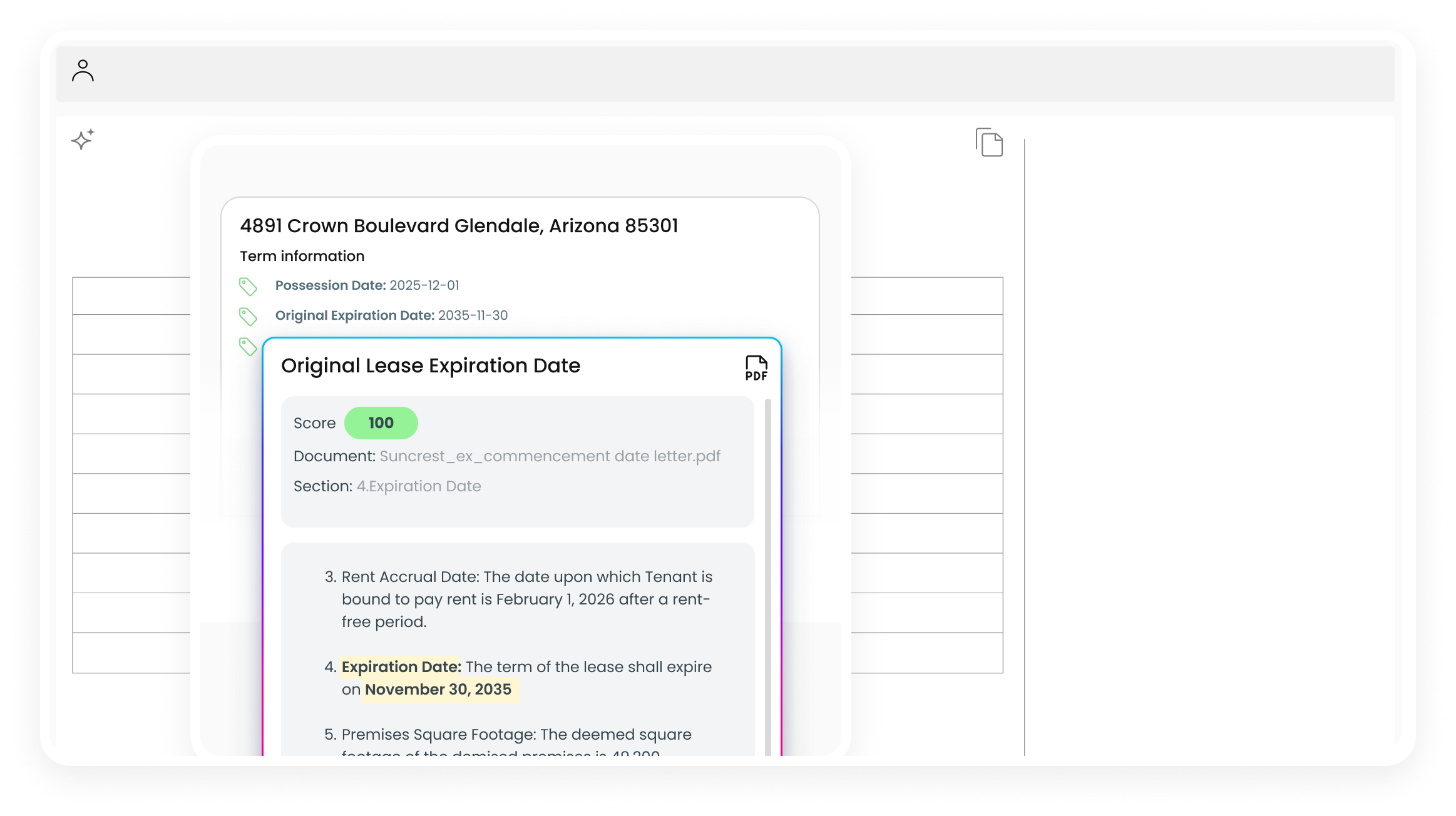

Data extraction systems often produce plausible outputs. Downstream systems assume correctness unless told otherwise. In production, those silent errors propagate - and become operational risk.

In 2025, we significantly strengthened Unframe’s confidence engine for structured and semi-structured data extraction. Confidence is now evaluated at the field level and is used directly during workflow execution. Low-confidence values can automatically trigger retries with alternate extraction strategies, route to human review, or be blocked from downstream systems altogether.
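To make the routing idea concrete, here is a minimal sketch of field-level confidence routing. It is an illustration only, not Unframe's implementation: the `ExtractedField` type, the `route_field` function, the thresholds, and the 0-to-1 confidence scale are all assumptions made for this example.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ACCEPT = "accept"
    RETRY = "retry"
    HUMAN_REVIEW = "human_review"
    BLOCK = "block"


@dataclass
class ExtractedField:
    name: str
    value: str
    confidence: float  # assumed scale: 0.0 (no confidence) to 1.0 (certain)
    attempts: int = 1  # number of extraction strategies tried so far


# Hypothetical thresholds, chosen only for illustration.
ACCEPT_AT = 0.90
REVIEW_AT = 0.60
MAX_ATTEMPTS = 2


def route_field(field: ExtractedField) -> Action:
    """Decide what happens to one extracted field based on its confidence."""
    if field.confidence >= ACCEPT_AT:
        return Action.ACCEPT
    if field.attempts < MAX_ATTEMPTS:
        return Action.RETRY  # try an alternate extraction strategy first
    if field.confidence >= REVIEW_AT:
        return Action.HUMAN_REVIEW
    return Action.BLOCK  # keep the value out of downstream systems


invoice = [
    ExtractedField("invoice_number", "INV-1042", 0.98),
    ExtractedField("total_amount", "1,280.00", 0.72, attempts=2),
    ExtractedField("due_date", "2025-13-01", 0.31, attempts=2),
    ExtractedField("vendor_name", "Acme", 0.55, attempts=1),
]
decisions = {f.name: route_field(f) for f in invoice}
```

In this sketch each field is routed on its own, so one doubtful value does not force the whole document into manual review.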

What this enabled: Extracted data can be used directly in critical workflows - without increasing manual review costs or operational risk.

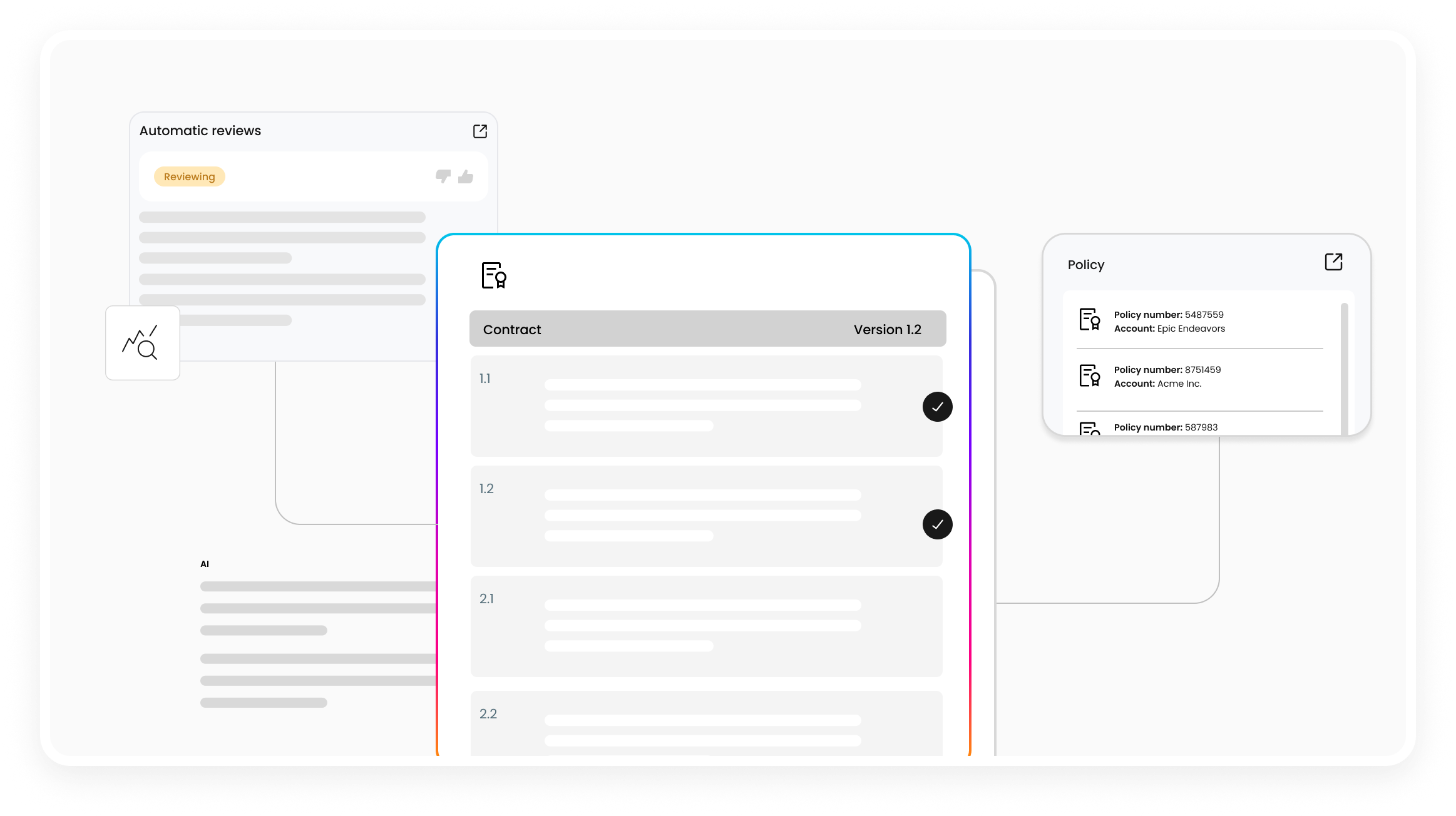

As AI becomes embedded in production systems, outputs increasingly reach customers, users, or systems of record. In high-impact scenarios, unvalidated outputs introduce unacceptable risk.

This year, we expanded guardrail capabilities across all LLM-based workflows. Validation rules, schema checks, and approval gates are now enforced during execution. Human review is a first-class execution mode and can be triggered automatically based on confidence thresholds or policy rules. Crucially, evaluation logic persists even as prompts, models, or data change.
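As a rough illustration of execution-time guardrails, the sketch below runs a schema check, a set of validation rules, and an approval gate over a single workflow output. The refund scenario, `REFUND_SCHEMA`, `RULES`, `APPROVAL_LIMIT`, and `check_output` are hypothetical names invented for this example and are not part of any Unframe API.

```python
from typing import Any, Callable

# Hypothetical schema: required keys and their expected Python types.
REFUND_SCHEMA = {"customer_id": str, "amount": float, "reason": str}

Rule = Callable[[dict[str, Any]], bool]

# Hypothetical policy rules; each returns True when the output passes.
RULES: dict[str, Rule] = {
    "amount_is_positive": lambda out: out["amount"] > 0,
    "reason_is_present": lambda out: bool(out["reason"].strip()),
}

# Hypothetical limit above which a human must approve the output.
APPROVAL_LIMIT = 500.0


def check_output(output: dict[str, Any]) -> tuple[str, list[str]]:
    """Return (status, problems).

    status is 'approved', 'needs_approval', or 'rejected'.
    """
    problems = [
        f"schema:{key}"
        for key, expected in REFUND_SCHEMA.items()
        if not isinstance(output.get(key), expected)
    ]
    if problems:
        return "rejected", problems  # skip the rules when the shape is wrong
    problems = [name for name, rule in RULES.items() if not rule(output)]
    if problems:
        return "rejected", problems
    if output["amount"] > APPROVAL_LIMIT:
        return "needs_approval", []  # approval gate: route to a human
    return "approved", []
```

Because `check_output` inspects only the output, the same checks keep applying when the prompt or model that produced it changes.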

What this enabled: Our solutions can be deployed in customer-facing and high-risk workflows while retaining oversight, accountability, and control.

Even well-designed AI systems degrade over time due to data drift, prompt changes, scale effects, or unexpected edge cases. Without observability, these failures remain invisible until they cause downstream impact.

We enhanced monitoring across Unframe’s AI pipelines to expose execution health, failure patterns, confidence trends, human review activity, and usage adoption over time. These signals reflect both system performance and how AI is actually used in production.

When workflows degrade, teams can determine whether issues are systemic or isolated, introduce human review where needed, and adjust behavior without rebuilding the system.

What this unlocked: Our solutions operate at scale, and teams can intervene proactively and improve them continuously after deployment.

Even capable AI systems fail to reach production when deployment options don’t align with enterprise infrastructure requirements. Data residency, network isolation, and security constraints are often non-negotiable.

This year, we refined Unframe’s deployment and implementation patterns to support both cloud and on-prem environments - while maintaining consistent architecture, security controls, and operational guarantees. AI workflows behave the same way regardless of where they run.

What this unlocked: Organizations can adopt AI without changing their infrastructure strategy or compromising security requirements.

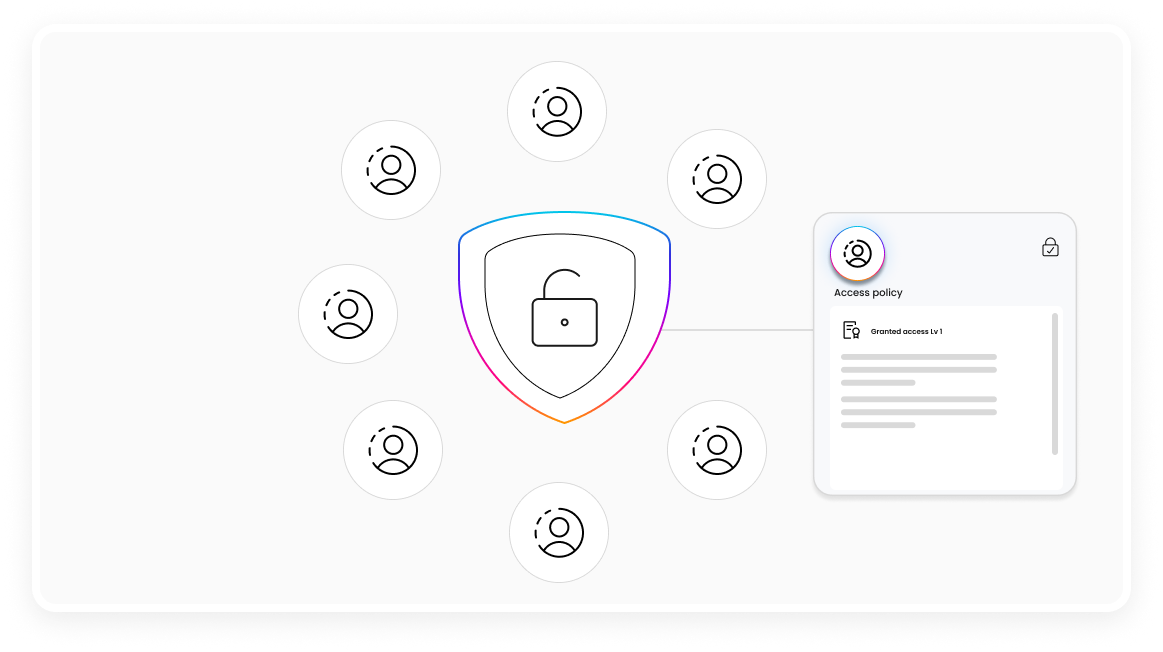

As AI adoption scales, access control becomes a system requirement. Informal permissions don’t work in production.

We strengthened security across the Unframe platform by enforcing role-based access control (RBAC) across data, workflows, prompts, logs, and configuration. Tenant isolation is built in. Permissions are scoped by responsibility and enforced at runtime.

In multi-team environments, finance, operations, and support teams can operate independently. Only approved administrators can modify production workflows or view sensitive traces.
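One way to picture runtime-enforced, team-scoped permissions is the sketch below. The role names, permission strings, and the `is_allowed` helper are assumptions made for illustration. They do not describe Unframe's actual permission model.

```python
from dataclasses import dataclass

# Hypothetical roles and the permissions each one carries.
ROLE_PERMISSIONS = {
    "analyst": {"workflow:run", "output:view"},
    "admin": {"workflow:run", "output:view", "workflow:edit", "trace:view"},
}


@dataclass(frozen=True)
class User:
    name: str
    role: str
    team: str


@dataclass(frozen=True)
class Resource:
    name: str
    team: str


def is_allowed(user: User, permission: str, resource: Resource) -> bool:
    """Check a permission at call time; access never crosses team boundaries."""
    same_team = user.team == resource.team
    has_permission = permission in ROLE_PERMISSIONS.get(user.role, set())
    return same_team and has_permission


finance_workflow = Resource("invoice-matching", team="finance")
support_workflow = Resource("ticket-triage", team="support")
dana = User("dana", role="analyst", team="finance")
omar = User("omar", role="admin", team="finance")
```

Here `dana` can run the finance workflow but cannot edit it or touch the support workflow, while `omar` can edit the finance workflow and view its traces.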

What this unlocked: Enterprise AI adoption that meets security requirements without expanding risk or exposing sensitive data.

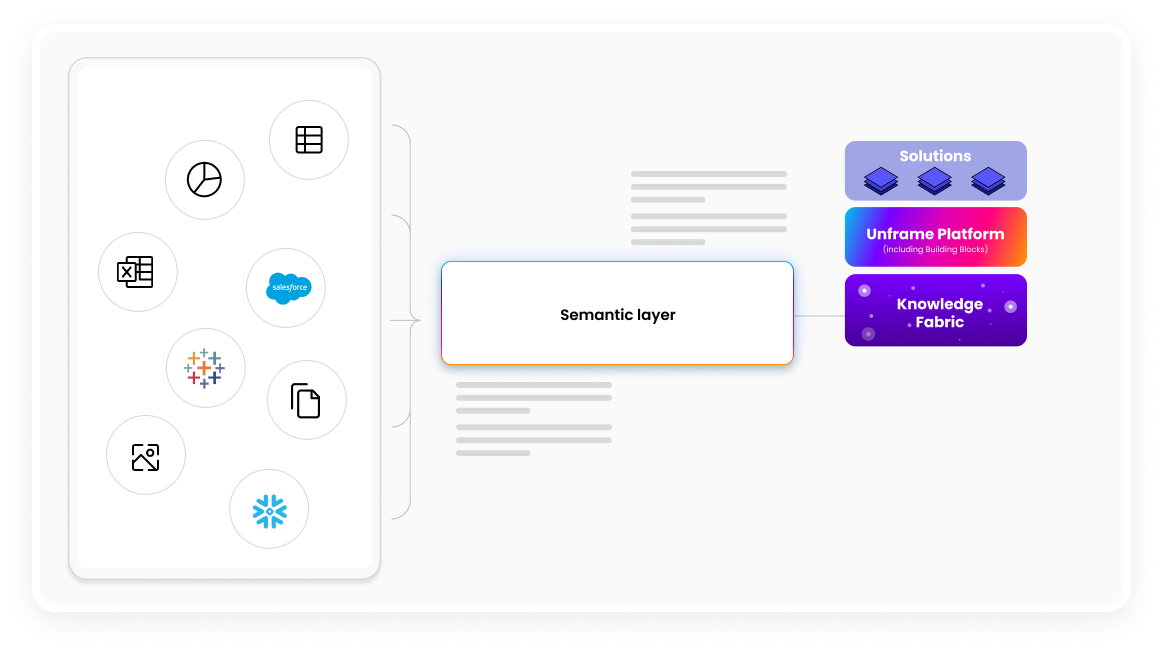

AI workflows built directly on raw data don’t scale. Schemas change. Sources multiply. One-off logic breaks. Without shared context, reuse and consistency fall apart.

We strengthened Unframe’s semantic layer to normalize structured and unstructured data into consistent domain concepts. AI workflows now reason over enterprise context rather than individual documents or tables. This abstraction decouples workflows from underlying data formats, allowing logic to remain stable as schemas evolve.
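The decoupling described here can be sketched as a mapping from source-specific field names to shared domain concepts. This is a simplified illustration under stated assumptions: the source names `erp_v1` and `erp_v2`, the field names, and both functions are hypothetical, and a real semantic layer would also handle unstructured inputs.

```python
# Hypothetical mappings from source-specific field names to domain concepts.
FIELD_MAPPINGS = {
    "erp_v1": {"cust_no": "customer_id", "amt": "invoice_total"},
    "erp_v2": {"customerId": "customer_id", "totalAmount": "invoice_total"},
}


def to_domain_record(source: str, raw: dict[str, str]) -> dict[str, str]:
    """Rename known source fields to domain concepts and drop the rest."""
    mapping = FIELD_MAPPINGS[source]
    return {mapping[key]: value for key, value in raw.items() if key in mapping}


def is_large_invoice(record: dict[str, str], threshold: float = 10_000.0) -> bool:
    """Workflow logic written once, against domain concepts only."""
    return float(record["invoice_total"]) > threshold


old_row = to_domain_record("erp_v1", {"cust_no": "C7", "amt": "12500", "legacy_flag": "Y"})
new_row = to_domain_record("erp_v2", {"customerId": "C7", "totalAmount": "12500"})
```

When a source schema changes, only its entry in `FIELD_MAPPINGS` needs updating. `is_large_invoice` stays untouched.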

What this unlocked: Reusable AI workflows that stay accurate and consistent as data, domains, and business requirements change.

Read more about our Knowledge Fabric here.

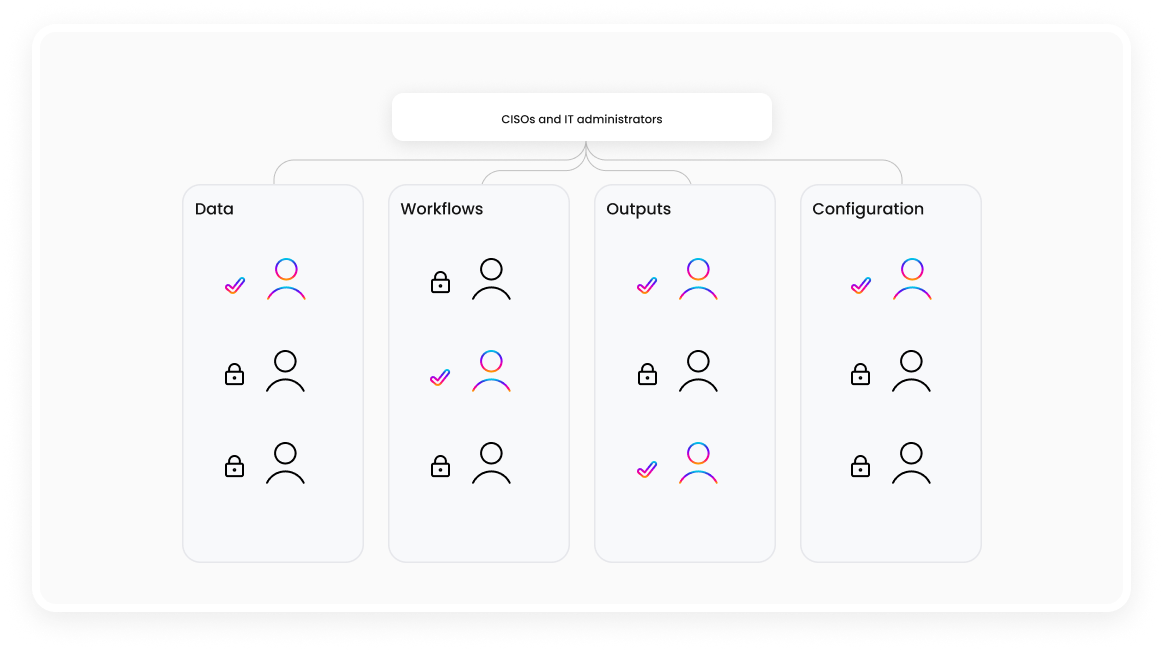

As AI usage expands, governance becomes mandatory. Without it, compliance gaps and misuse risks emerge.

This year, we introduced fine-grained governance controls across the Unframe platform. Explicit permission levels are enforced for every resource, with granular controls for sensitive data access. CISOs and IT administrators can define who can access data, run workflows, view outputs, or make configuration changes. All activity is auditable.

What this unlocked: Centralized control and auditability for AI usage across teams and use cases - enabling secure, compliant scale.

In 2026, we’re excited to build more AI solutions that are reliable, controllable, and ready to operate inside real enterprise workflows. Now more organizations will be able to move beyond experimentation and run AI as a core part of their operational infrastructure.

For insights on where enterprise AI is headed, check out 2026 predictions from our leadership.