When enterprise AI initiatives encounter resistance, many teams respond by adding more people. Forward Deployed Engineers (FDEs) have often been the answer, as a flexible bridge between technology and real-world implementation. They translate complexity into custom code and make systems work together, helping organizations move from prototypes to production. For years, this approach made sense. But as AI evolves, the needs of enterprises are changing.

An FDE typically combines engineering, consulting, and product expertise. Their mission is to embed within a customer’s environment and deliver tailored solutions that standard product teams might not cover.

FDEs often:

In essence, FDEs are “bridge builders” between product and customer. This role has been particularly useful in high-stakes domains where integration is complex and every deployment is unique.

In the early stages of enterprise AI, products often lacked the flexibility to adapt to diverse environments. FDEs helped organizations overcome that limitation by customizing software for each deployment. This was especially valuable when systems needed to interface with legacy data, manual processes, or compliance-heavy environments.

FDEs became a natural solution in situations where:

For a long time, this approach worked, especially when deployments were few and deal sizes were large.

The enterprise AI landscape is changing. Modern AI platforms are beginning to reason about context directly — understanding meaning, structure, and relationships within data without the same level of manual translation. In this new environment, FDE-heavy delivery models encounter challenges.

1. Data and Model Complexity Outpaces Human Integration

FDEs excel when variation lies in workflows. But AI systems introduce variation in data, models, and governance. Managing this complexity manually doesn’t scale. Automated, context-aware data and model layers are needed to keep up.

2. Slower Learning Loops

AI systems improve through continuous feedback and retraining. When human intermediaries sit between product and context, those loops slow down. Automated learning pipelines allow products to evolve faster and with greater precision.

3. Blurred Product Boundaries

Embedding engineers deeply in each customer’s workflow risks turning every deployment into a one-off build. For AI platforms meant to generalize and learn from aggregated experience, that limits scalability.

The new generation of enterprise AI platforms embeds contextual reasoning — through ontologies, semantic layers, and adaptive connectors — allowing systems to adapt automatically to each environment.

“The next era of enterprise AI won’t depend on forward deployment engineers to bridge context. It will depend on platforms that understand it intrinsically.” - Shay Levi, CEO, Unframe

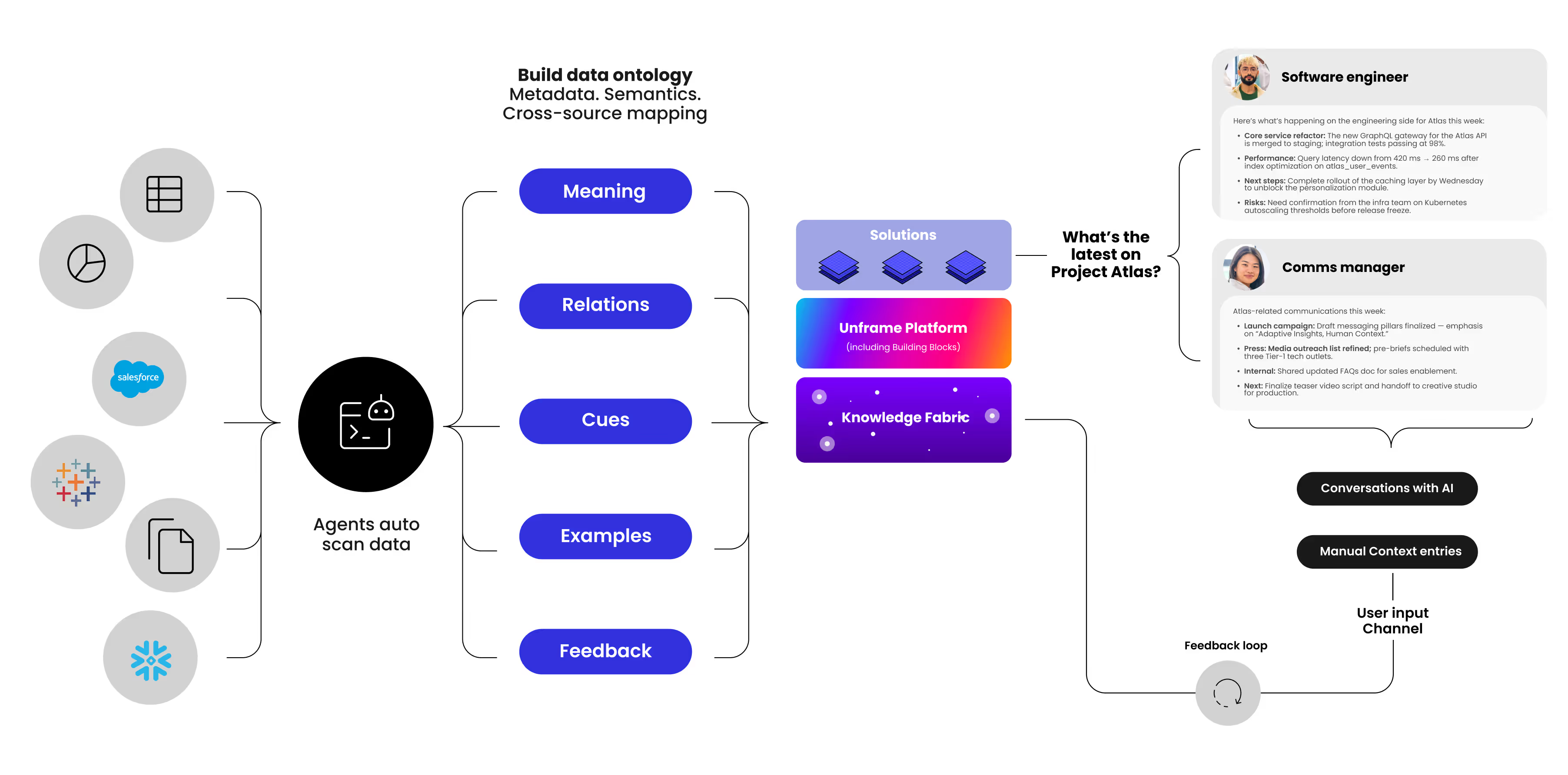

Unframe’s Knowledge Fabric turns enterprise context into a first-class part of the AI architecture. It connects data sources, business taxonomies, and operational metadata into a unified graph of meaning. This allows models, agents, and decision systems to reason about the enterprise itself — replacing much of the manual translation work once done by FDEs.

This shift from people-driven to platform-driven context enables repeatability, speed, and accuracy at scale.
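To make the idea of a "unified graph of meaning" more concrete, here is a minimal sketch of how business terms, source fields, and ownership metadata might be linked and queried. It is purely illustrative and does not describe Unframe's actual implementation: the `ContextGraph` class, the relation names (`has_narrower`, `mapped_to`, `owned_by`), and the field paths are all hypothetical.

```python
from collections import defaultdict


class ContextGraph:
    """Toy in-memory graph of (subject, relation, object) triples.

    Hypothetical illustration only; not a real product API.
    """

    def __init__(self):
        # Maps (subject, relation) to the set of related objects.
        self._edges = defaultdict(set)

    def add(self, subject, relation, obj):
        self._edges[(subject, relation)].add(obj)

    def objects(self, subject, relation):
        return sorted(self._edges.get((subject, relation), set()))

    def fields_for_term(self, term):
        """Resolve a business term, plus any narrower terms, to source fields."""
        fields, stack, seen = set(), [term], set()
        while stack:
            current = stack.pop()
            if current in seen:
                continue  # guard against cycles in the taxonomy
            seen.add(current)
            fields.update(self._edges.get((current, "mapped_to"), set()))
            stack.extend(self._edges.get((current, "has_narrower"), set()))
        return sorted(fields)


graph = ContextGraph()
# Business taxonomy (assumed example terms).
graph.add("Customer", "has_narrower", "Enterprise Customer")
# Mappings from terms to fields in two assumed source systems.
graph.add("Customer", "mapped_to", "crm.accounts.account_id")
graph.add("Enterprise Customer", "mapped_to", "billing.contracts.client_ref")
# Operational metadata attached to a field.
graph.add("crm.accounts.account_id", "owned_by", "Sales Operations")

print(graph.fields_for_term("Customer"))
# ['billing.contracts.client_ref', 'crm.accounts.account_id']
```

In this sketch the mapping from "Customer" to its underlying fields is recorded once in the graph, so any model or agent can resolve it with a query instead of relying on an engineer to re-derive it for each deployment.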

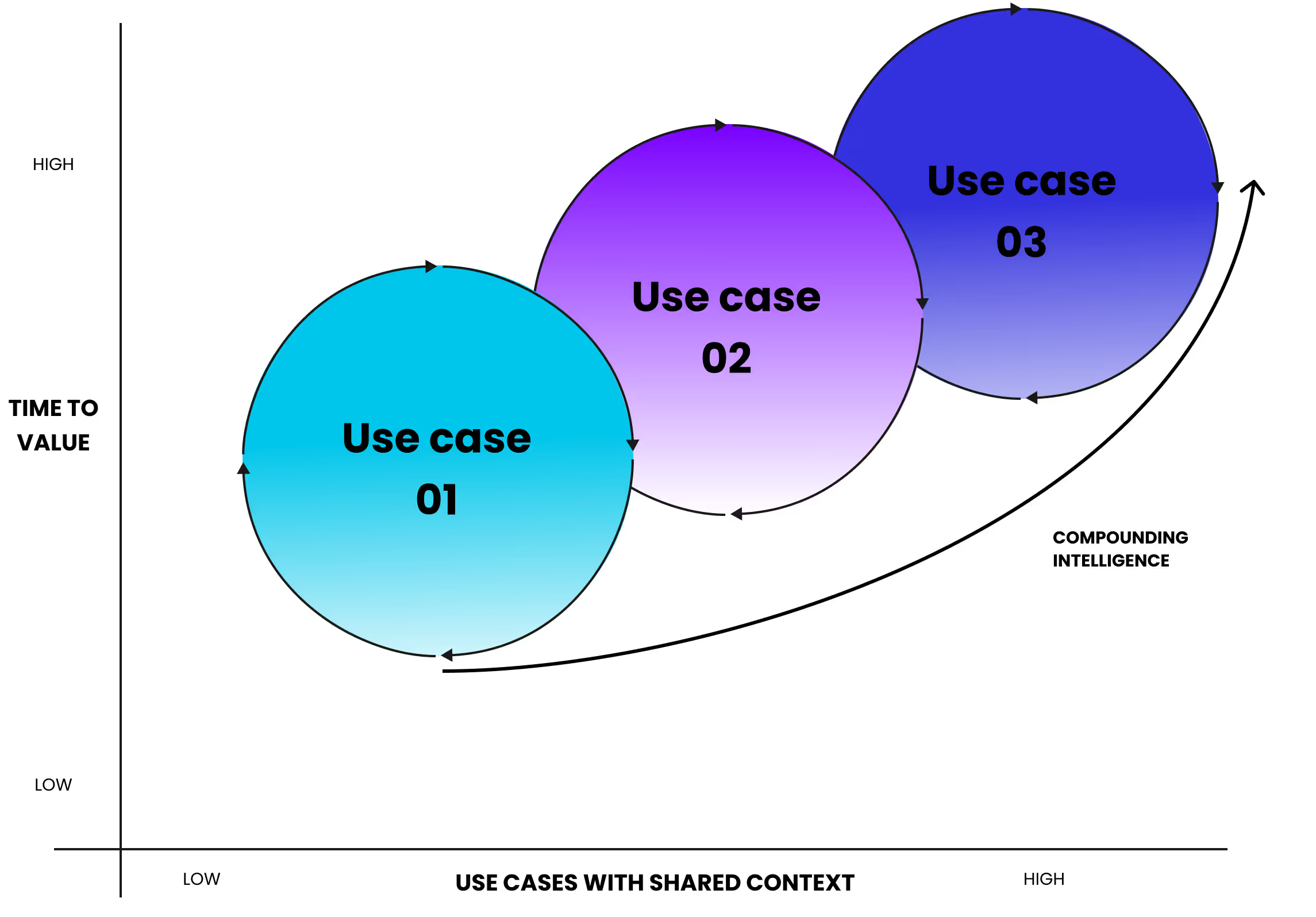

Relying on FDEs signals a product still in its “project” phase. Each hour an engineer spends adapting systems for one customer is a sunk cost that doesn’t transfer to the next. Modern context-aware platforms eliminate that dependency. They capture context once and apply it everywhere, so every new deployment strengthens the next.

When evaluating enterprise AI solutions, the key question is no longer “Do we need Forward Deployed Engineers?” but rather “Why does this system require them?”

If a platform depends on deep human embedding to function, it may not yet be ready for scalable, self-adapting enterprise use. The future belongs to AI systems that understand context intrinsically and apply that understanding across environments.

FDEs played a vital role when AI lacked contextual awareness. They helped bridge the gap between static products and dynamic enterprise realities. But today, tools can capture and reason about context directly. As AI platforms mature, the need for manual integration decreases — paving the way for scalable, intelligent, and self-adapting systems.