Enterprise AI initiatives fail less often due to model performance and more often due to platform economics that deteriorate over time.

This guide evaluates the total cost of ownership (TCO) of deploying enterprise-grade AI through two approaches:

A custom, internally built AI platform (the DIY AI stack) assembled from cloud services (e.g., Azure OpenAI, SageMaker), orchestration frameworks, vector databases, retrieval-augmented generation (RAG) pipelines, and bespoke governance layers. The enterprise owns architecture, operations, security, and long-term sustainability.

A managed AI delivery platform (Unframe) that provides production-ready AI solutions with outcome-based pricing and full lifecycle ownership, including operations, governance, and ongoing optimization. Unframe converts AI from a platform liability into a managed enterprise capability with predictable economics.

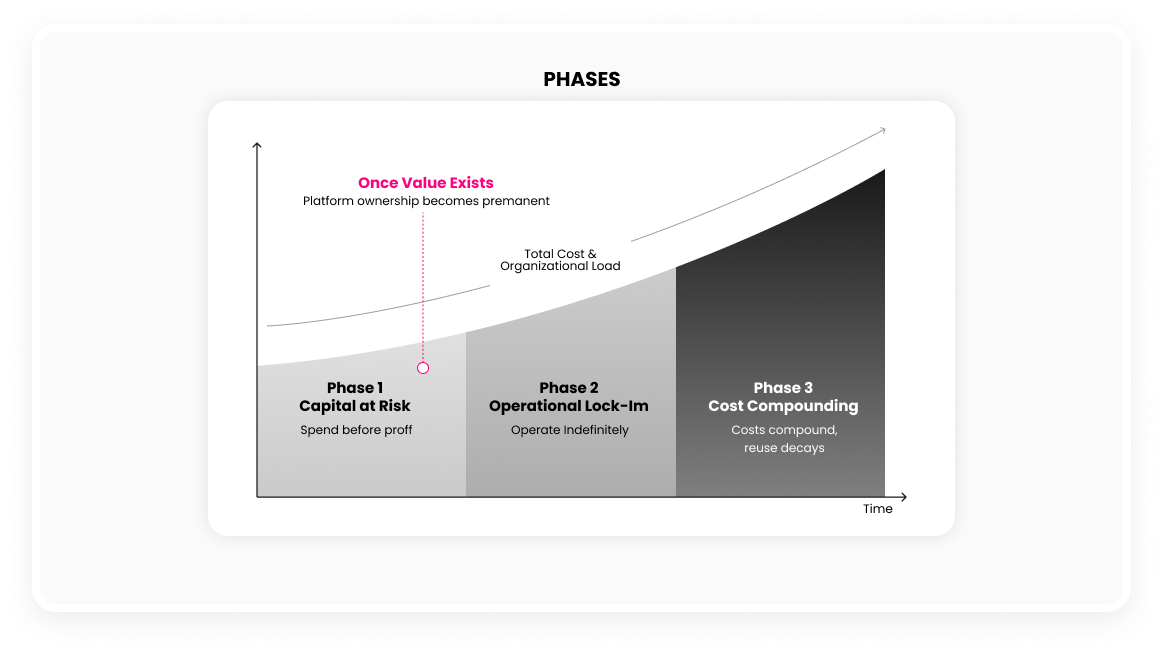

AI cost evolves across three operational phases that define real-world cost, risk, and sustainability.

DIY AI Stack

Before any business value is realized, a DIY AI stack requires organizations to commit capital and architectural decisions without real usage signals.

This includes designing and operationalizing:

From a technical perspective, this is distributed platform engineering.

Observed enterprise impact:

Industry analyses consistently show that building a production-grade generative AI agent typically costs $600K–$1.5M before meaningful value is proven.

This creates a high-risk capital profile: spend first, learn later.

Unframe

Unframe inverts Phase-1 economics by preserving optionality. Instead of capitalizing a platform up front, enterprises validate outcomes first:

Pricing (annualized, all-inclusive):

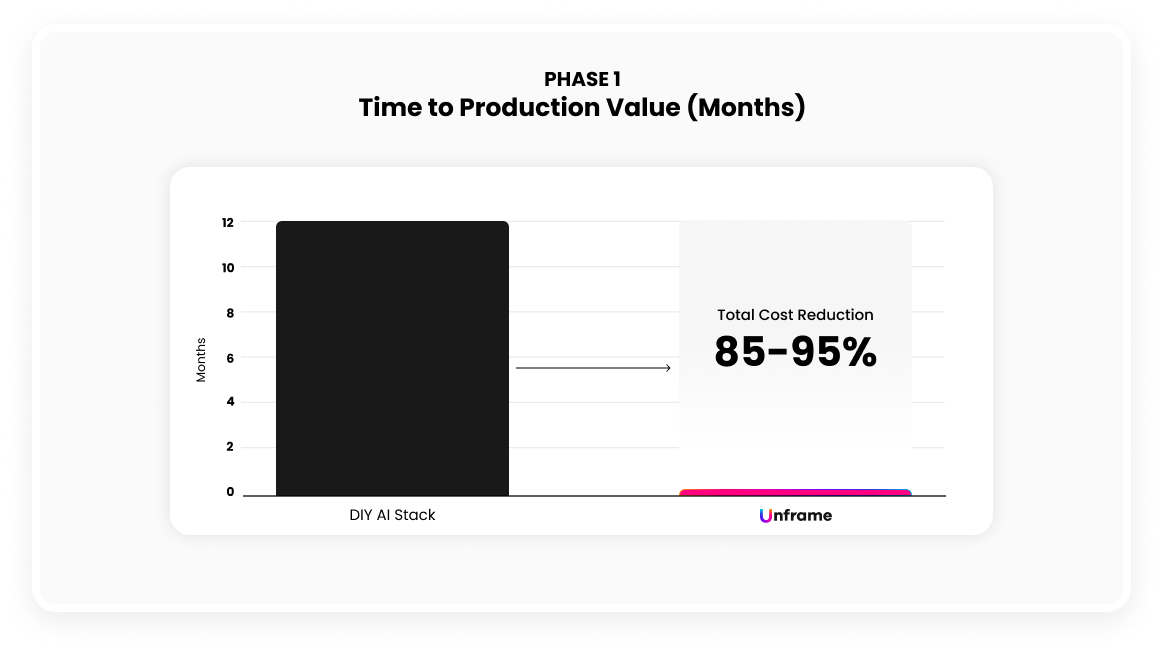

Phase 1 TCO Impact

Unframe vs DIY AI Stack: 85–95% lower Phase 1 TCO

Unframe shifts Phase 1 from capital expenditure to controlled validation.
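To make the 85–95% figure concrete, the short sketch below applies it to the $600K–$1.5M DIY range cited earlier. It assumes the percentage applies directly to that range, which the guide does not state explicitly. The function name `remaining_cost_range` is hypothetical, and the result is an implied illustration, not a restatement of the pricing table.

```python
# Illustrative arithmetic only. Assumption: the 85-95% reduction applies
# directly to the $600K-$1.5M DIY Phase 1 range cited in this guide.
# Integer math (whole dollars, whole percents) avoids float rounding noise.

def remaining_cost_range(baseline_low, baseline_high, reduction_pct_low, reduction_pct_high):
    """Return (lowest, highest) remaining cost after a percentage reduction range."""
    # Lowest remaining cost: smallest baseline with the largest reduction.
    lowest = baseline_low * (100 - reduction_pct_high) // 100
    # Highest remaining cost: largest baseline with the smallest reduction.
    highest = baseline_high * (100 - reduction_pct_low) // 100
    return lowest, highest

DIY_LOW, DIY_HIGH = 600_000, 1_500_000      # DIY Phase 1 range from the text
REDUCTION_LOW, REDUCTION_HIGH = 85, 95      # claimed reduction, in percent

low, high = remaining_cost_range(DIY_LOW, DIY_HIGH, REDUCTION_LOW, REDUCTION_HIGH)
print(f"Implied Phase 1 spend: ${low:,} to ${high:,}")
```

Under these assumptions the implied Phase 1 spend falls between $30,000 and $225,000. Actual figures depend on the pricing tier and the specific DIY baseline being compared.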

This reduction is achieved by eliminating entire cost categories:

Phase-1 cost is not limited to engineering spend or cloud consumption; it also includes the time-bound commitment of capital and organizational attention before any production signal exists. In a DIY AI stack, this window typically spans multiple quarters, during which architectural decisions and budgets are locked in without real usage feedback.

Unframe compresses this exposure to days, allowing solution validation before capital, teams, and long-term operating assumptions are committed.