
Messy data isn’t the enemy of enterprise AI. Waiting for perfectly organized data is. The fastest-moving organizations build AI that works with distributed, fragmented data in real time instead of making everyone hold their breath through endless consolidation projects.

The meeting always starts the same way. Someone presents the AI roadmap. The executive sponsor asks about the timeline. Then a well-meaning voice from the back offers the fatal suggestion: "Shouldn't we get our data in order first?"

What follows is predictable. The AI initiative gets paused while the organization launches a data consolidation project. Eighteen months later, the warehouse is still under construction, the original AI use cases have been deprioritized, and competitors who skipped the consolidation step are already running AI in production.

Regardless of intentions, the consolidation-first approach doesn't just delay AI. It often kills it entirely.

Here's the contrarian view more enterprises need to hear: the goal was never to eliminate data silos. It was to make AI effective despite them. The organizations deploying AI successfully aren't waiting for perfect data. They're building AI for siloed data as it actually exists, and always will exist: messy, distributed, and constantly changing.

On the surface, the logic behind consolidation sounds reasonable. Expert best practice says AI needs clean, unified data. Your data lives in dozens of disconnected systems. Therefore, step one is to bring that data together into a single repository where AI can access it consistently.

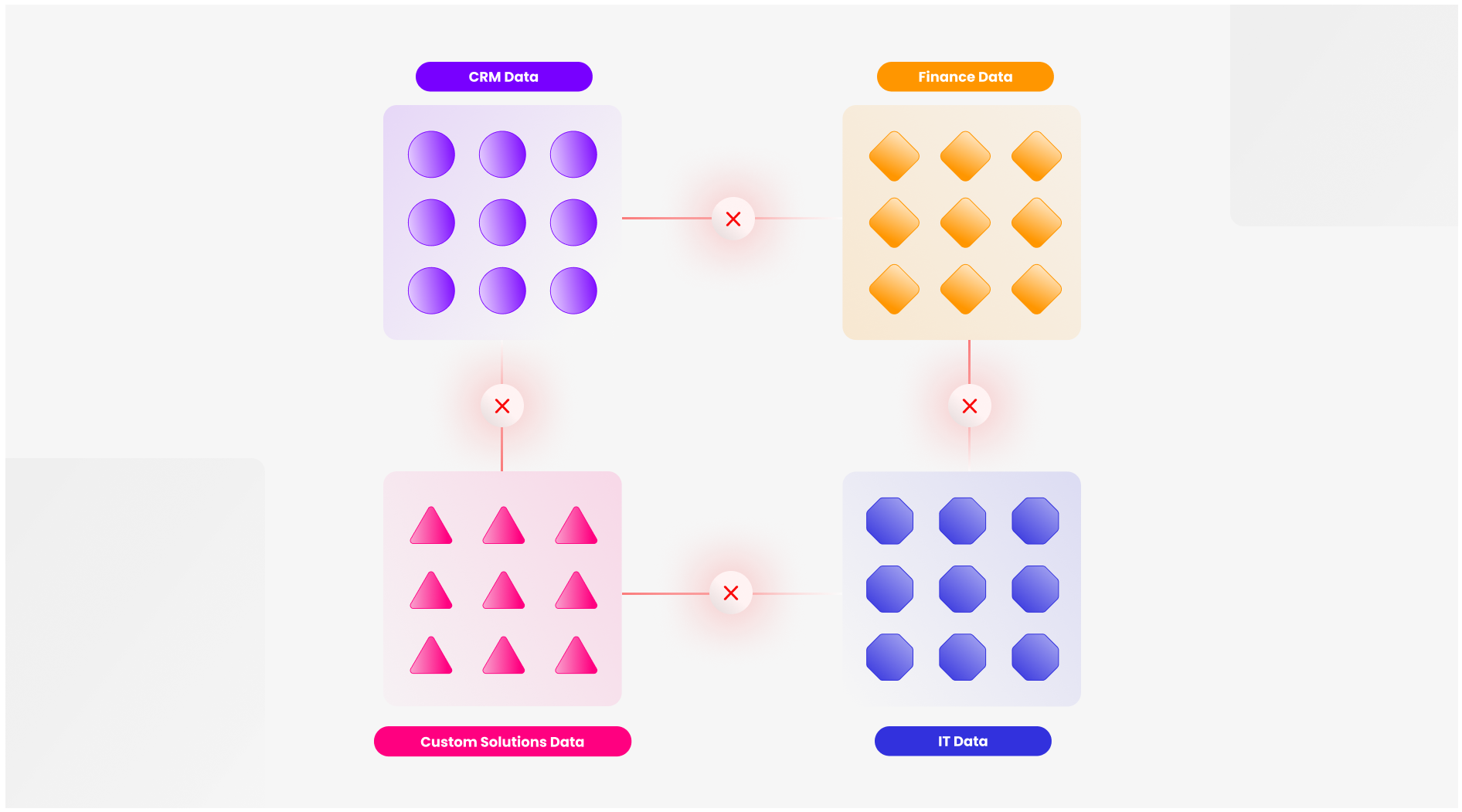

But this thinking comes from an earlier era. When the primary use case was building dashboards and running reports, consolidated schemas made sense. However, AI doesn't work like dashboards. An agent answering a question needs to pull context from your CRM, your document repository, your Slack history, and your ERP simultaneously. No warehouse contains all of these with current data.

A workflow automation needs to react to events as they happen, not to batch extracts that were fresh yesterday morning. The latency inherent in consolidation architectures makes them structurally unsuited to production AI. The numbers tell the story: 74% of businesses will invest in AI this year, but data quality issues threaten their ambitions.

Production AI requires three things from enterprise data. It needs to connect context across systems, organize business meaning, and receive fresh information at runtime. Consolidation addresses only the first requirement, and does it poorly.

Connection through consolidation means extracting data from source systems, transforming it into a common schema, and loading it into a central repository. Every step introduces latency. Every schema change in a source system requires pipeline updates. Every new data source requires new integration work. The maintenance burden grows with each system you connect, and enterprise environments typically include dozens or hundreds of systems generating relevant data.

Consolidation fails entirely on the second requirement. Business meaning isn't something you can extract and load. It lives in the relationships between entities, the context around events, the institutional knowledge that tells you why a particular customer record matters or what a specific contract clause implies. Warehouses store data. They don't preserve the semantic layer that AI needs to reason correctly.

The third requirement exposes the fundamental mismatch. AI systems making decisions need current information. An agent handling a customer inquiry needs to know about the email that arrived five minutes ago, not the one captured in last night's batch load. The architecture that consolidation creates is optimized for historical analysis, not real-time intelligence.

Before accepting that silos must be eliminated, it's worth asking why they exist in the first place. The answer isn't organizational dysfunction or technical debt. Silos reflect real structural requirements that aren't going away.

Compliance boundaries create silos by design. Financial data lives in systems with audit controls that HR systems don't need. Customer PII requires protections that operational metrics don't warrant. These separations aren't accidents to be fixed. They're intentional architecture that serves regulatory and security purposes.

Best-of-breed tooling creates silos as a side effect of good decisions. Your sales team uses Salesforce because it's the best CRM for their workflow. Your engineers use Jira because it's the best issue tracker for theirs. Your finance team uses NetSuite because it handles their specific requirements. Eliminating these silos would mean replacing specialized tools with a monolithic platform that does everything poorly, a trade nobody actually wants to make.

The enterprise that claims to have "eliminated silos" has really just created new ones. The data warehouse becomes another silo. The data lake becomes another silo. The consolidation layer itself becomes a system that must be integrated with everything else.

Teams that recognize this reality early, the ones building AI-ready data practices, stop trying to solve an unsolvable problem and start working with the environment that actually exists.

The alternative to consolidation isn't chaos. It's runtime unification. That means connecting to data where it lives and assembling context on demand for each specific use case.

Instead of copying data into a central repository, the system maintains live connections to source systems. Data stays owned by the domain teams who know it best, an approach that treats data as a product within the organization so other departments can consume it. Your Salesforce data stays in Salesforce. Your documents stay in SharePoint. Your transactional records stay in your ERP. The AI layer can access all of them without requiring any of them to move.

The second component is per-use-case organization. Rather than building a universal schema that tries to represent everything, effective AI data management defines context models specific to each application. For a contract analysis use case, this means mapping the entities, relationships, and signals that matter for contracts. For a customer service use case, it means different entities, different relationships, different signals. Each AI application gets exactly the context it needs, assembled from the relevant sources.
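Per-use-case organization can be pictured as a small, explicit context model for each application. The Python sketch below is a minimal illustration under stated assumptions, not a product API: the `EntitySpec` and `ContextModel` types, the source labels (`"salesforce"`, `"sharepoint"`, `"erp"`, `"jira"`), and every field name are hypothetical. It shows how the same `account` entity can appear in two use cases with different fields and relationships, and how each model declares which source systems it depends on.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class EntitySpec:
    """One entity a use case cares about, and the system of record it lives in."""
    name: str
    source: str               # hypothetical source label, e.g. "salesforce"
    fields: tuple[str, ...]   # only the fields this use case needs


@dataclass(frozen=True)
class ContextModel:
    """A per-use-case view: just the entities and relationships one application needs."""
    use_case: str
    entities: tuple[EntitySpec, ...]
    relationships: tuple[tuple[str, str, str], ...] = ()  # (from_entity, relation, to_entity)

    def sources(self) -> set[str]:
        """Source systems that must be reachable to serve this use case."""
        return {e.source for e in self.entities}


# Hypothetical contract-analysis model: contracts, the accounts that signed them, invoices.
CONTRACT_ANALYSIS = ContextModel(
    use_case="contract_analysis",
    entities=(
        EntitySpec("contract", "sharepoint", ("title", "clauses", "renewal_date")),
        EntitySpec("account", "salesforce", ("name", "owner", "tier")),
        EntitySpec("invoice", "erp", ("amount", "due_date", "status")),
    ),
    relationships=(
        ("contract", "signed_by", "account"),
        ("invoice", "billed_under", "contract"),
    ),
)

# Hypothetical customer-service model: the same "account" entity, different fields and neighbors.
CUSTOMER_SERVICE = ContextModel(
    use_case="customer_service",
    entities=(
        EntitySpec("account", "salesforce", ("name", "tier", "open_cases")),
        EntitySpec("ticket", "jira", ("summary", "status", "assignee")),
    ),
    relationships=(("ticket", "raised_by", "account"),),
)

for model in (CONTRACT_ANALYSIS, CUSTOMER_SERVICE):
    print(model.use_case, sorted(model.sources()))
```

Keeping each model small and explicit means a new use case is added by declaring a new model, not by migrating a universal schema that every other application also depends on.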

The third component is runtime serving. When an AI system needs to answer a question or make a decision, the data foundation assembles the required context at that moment. Not from a batch extract. Not from a cached snapshot. From the live systems where the data currently resides. This eliminates the latency problem that makes consolidated architectures unsuitable for production AI.
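Runtime serving can be sketched as a function that queries each relevant source at request time and merges the results. In this minimal Python example, `LiveSource`, `InMemorySource`, and `assemble_context` are hypothetical names, and `InMemorySource` stands in for a real connector, which would call the source system's API instead of reading a dictionary. The point it illustrates is that nothing is copied or cached, so an update made between two requests shows up in the second one.

```python
from __future__ import annotations

from concurrent.futures import ThreadPoolExecutor
from typing import Protocol


class LiveSource(Protocol):
    """Hypothetical connector interface: reads from a system of record in place."""
    name: str

    def fetch(self, key: str) -> dict: ...


class InMemorySource:
    """Stand-in for a live system (CRM, mailbox, ERP) in this sketch."""

    def __init__(self, name: str, records: dict[str, dict]):
        self.name = name
        self.records = records  # mutated directly below to simulate live updates

    def fetch(self, key: str) -> dict:
        # A real connector would call the source's API here; no copy is kept between calls.
        return dict(self.records.get(key, {}))


def assemble_context(key: str, sources: list[LiveSource]) -> dict[str, dict]:
    """Query every relevant source at request time, in parallel, keyed by source name."""
    with ThreadPoolExecutor(max_workers=max(1, len(sources))) as pool:
        results = pool.map(lambda s: (s.name, s.fetch(key)), sources)
        return dict(results)


crm = InMemorySource("crm", {"acme": {"tier": "gold"}})
email = InMemorySource("email", {"acme": {"latest_subject": "Renewal question"}})
print(assemble_context("acme", [crm, email]))

# An email arrives after setup; the next request sees it with no reload or batch step.
email.records["acme"] = {"latest_subject": "Urgent: billing error"}
print(assemble_context("acme", [crm, email])["email"]["latest_subject"])
```

Fetching sources in parallel keeps request latency close to that of the slowest single source rather than the sum of all of them; a production version would also need per-source timeouts and error handling.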

Critically, governance travels with the data in this model. The access controls you've defined in Salesforce apply when AI accesses Salesforce data. The permissions in SharePoint apply when AI accesses documents. You don't rebuild your governance model in a separate layer. The security architecture you've already invested in carries forward.
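One way to picture governance traveling with the data is a connector that applies the source system's own permissions for the requesting user on every read. The Python sketch below assumes a simplified per-record access list; `GovernedSource`, `AccessDenied`, and `context_for_user` are hypothetical names, and `"salesforce"` and `"netsuite"` are only labels, not real integrations. Real systems expose far richer permission models, and the AI layer would pass the user's identity through to them rather than reimplementing their rules.

```python
from __future__ import annotations

from dataclasses import dataclass


class AccessDenied(Exception):
    """Raised when the source system's own permissions refuse a read."""


@dataclass
class GovernedSource:
    """Hypothetical connector that enforces the source system's access list."""
    name: str
    records: dict[str, dict]
    acl: dict[str, set[str]]  # record key -> users the source system allows (simplified)

    def fetch_as(self, user: str, key: str) -> dict:
        # The check uses the source's permissions, not a copy rebuilt in the AI layer.
        if user not in self.acl.get(key, set()):
            raise AccessDenied(f"{user} may not read {self.name}/{key}")
        return dict(self.records.get(key, {}))


def context_for_user(user: str, key: str, sources: list[GovernedSource]) -> dict[str, dict]:
    """Assemble only what this user could already see in each underlying system."""
    context: dict[str, dict] = {}
    for source in sources:
        try:
            context[source.name] = source.fetch_as(user, key)
        except AccessDenied:
            continue  # omit the record; the model never sees data the user can't access
    return context


crm = GovernedSource("salesforce", {"acme": {"tier": "gold"}}, {"acme": {"alice", "bob"}})
finance = GovernedSource("netsuite", {"acme": {"outstanding": 1200}}, {"acme": {"bob"}})

print(context_for_user("alice", "acme", [crm, finance]))  # CRM record only
print(context_for_user("bob", "acme", [crm, finance]))    # CRM and finance records
```

Denied records are omitted rather than surfaced as errors, so the assembled context simply mirrors what the user could already see; whether to omit silently or tell the user something was withheld is a design choice that depends on the application.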

This is how data extraction and abstraction work at enterprise scale: not by centralizing everything first, but by making distributed data accessible and meaningful for AI at the moment it's needed.

The enterprises deploying AI successfully in 2026 aren't the ones with the cleanest data architectures. They're the ones that stopped treating data cleanup as a prerequisite for AI deployment.

The adaptive data foundation approach lets you deploy production AI now, against your data as it actually exists, without a multiyear prerequisite.

Every month spent on data consolidation before AI deployment is a month your competitors are learning from production AI. They're discovering which use cases deliver value, which need refinement, and how to operate AI reliably in their specific environment. That learning compounds. The gap widens.

The silos aren't going anywhere. Your AI strategy shouldn't depend on them disappearing. Let us help you streamline your success with AI. Schedule a meeting.