Enterprise AI: Build or Buy?

Download Guide

At work, employees expect answers the same way they get them from consumer AI. But most enterprise search solutions are behind; they deliver documents instead of understanding the context and intent behind them. Real conversational knowledge access requires a context-rich knowledge fabric, not just a retrieval chatbot on top of search.

It’s kind of surreal how pervasive AI has become in daily lives. Many of your employees use ChatGPT to plan vacations, draft emails, and debug code at home. But this ironically is not the experience they get when they come to work. Typing keywords into a search box generally returns 4,000 documents. This is the unfortunate state of enterprise knowledge access in 2026.

Consumer AI has trained everyone to expect conversational interactions. They ask a question and get an answer. Meanwhile, most organizations are still forcing employees to search, sift, open, read, compare, and synthesize across multiple systems just to answer a straightforward question.

According to a 2024 survey by Pryon and Unisphere Research, 70% of employees spend one hour or more finding a single piece of information. Nearly a quarter spend more than five hours. This isn't a search relevance problem. It's a fundamental mismatch between how people want to access knowledge and how enterprises deliver it.

And the gap isn't technical capability. It's architectural. Consumer AI doesn't need to respect permissions, trace sources, or ground answers in proprietary business context. Enterprise AI does. That's why bolting a chatbot onto your intranet doesn't solve the problem. And why most enterprise conversational AI deployments disappoint.

Enterprise search was built for a different era. It assumes users know exactly what they're looking for, can formulate precise queries, and have time to evaluate results. None of those assumptions hold anymore.

The culprit isn't bad search technology. It's the paradigm itself. Enterprise search returns documents, not answers. Even when results are perfectly ranked, users still have to open files, read through content, compare across sources, and synthesize the actual answer themselves. The search engine's job ends where the hard work begins.

Keyword matching compounds the problem. Users fail when they don't know the right terminology, when information lives under unexpected labels, or when answers require connecting dots across multiple sources. Ask about "customer churn" when the data is labeled "attrition metrics" and you get nothing. And good luck if you ask a question that requires synthesizing sales data, support tickets, and contract terms.

Enterprise search vendors have spent two decades optimizing relevance ranking. They've been solving the wrong problem. Even perfectly ranked results still leave users doing all the cognitive labor of synthesis. The issue was never finding documents. It was getting answers.

Here's the shift that matters. Users don't want to search, they want answers.

The question isn't "Where is the Q3 revenue report?" It's "What was our revenue in Q3, and how did it compare to forecast?" The first question returns a document. The second question requires understanding, context, and synthesis across multiple data sources. Traditional search handles the first question adequately. It completely fails the second.

This expectation isn't going away. The experience of asking questions in natural language and receiving coherent answers is becoming baseline. Employees who use conversational AI for personal tasks are increasingly expecting the same experience at work. And they'll route around systems that don't deliver it.

But enterprise knowledge access isn't consumer search with a logo change. It requires capabilities that ChatGPT was never designed to provide. Permission awareness, so users only get answers from data they're authorized to see. Source attribution, so answers are traceable and verifiable. Business context, so the AI understands your terminology, your processes, your organizational structure. And auditability, so regulated industries can demonstrate what was asked and what was answered.

This is why "just add a chatbot" doesn't work. Conversational agents for enterprise knowledge access require architecture that consumer AI lacks entirely.

Most enterprise chatbot deployments follow a predictable pattern. Take existing search infrastructure, add an LLM layer, deploy a conversational interface, and hope for the best.

The results are equally predictable. Hallucinations grounded in nothing. Answers that ignore permission boundaries. Inability to trace where information came from. Users quickly learn they can't trust the responses, adoption craters, and the project gets quietly shelved.

The numbers bear this out. Research from Deloitte shows that 77% of businesses express concern about AI hallucinations. More troubling, Forbes stated 47% of enterprise AI users made at least one major decision based on hallucinated content in 2024. When the conversational interface is confident and fluent but the underlying architecture can't actually ground responses in real data, bad decisions follow.

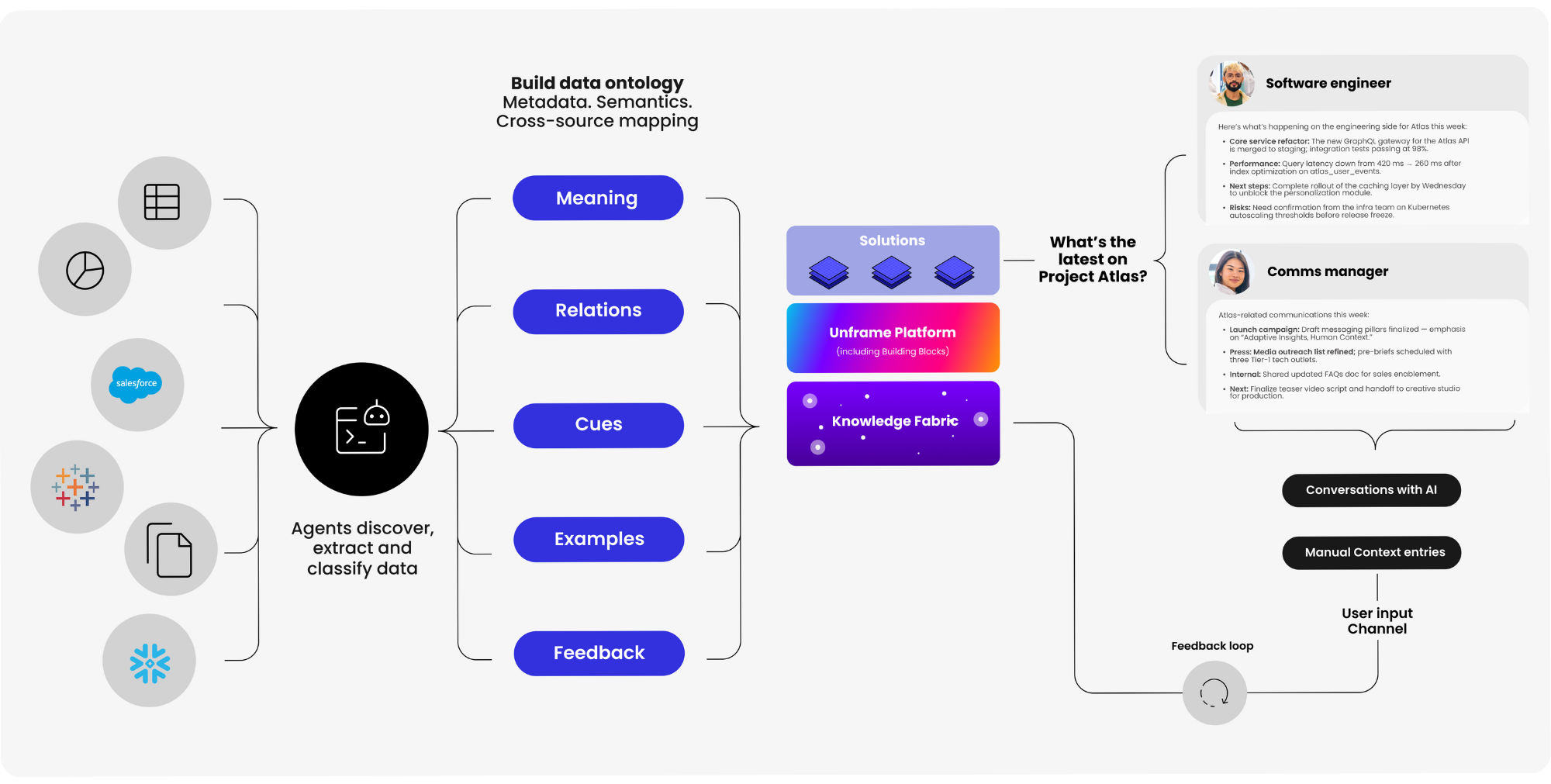

What's actually required is a semantic layer that understands connections across data sources. A knowledge fabric that makes AI fluent in business context before the conversational interface ever touches it. Real-time permission enforcement at the query level. Source citations that users can verify. Business context that adapts as the organization evolves. Without this foundation, conversational agents are just articulate liars.

The enterprises getting real value from conversational AI have invested in the foundation layer first. For example, with a Knowledge Fabric instance from Unframe, your organization gets a semantic layer purpose-built for AI.

You define the use case. Unframe connects your systems, models the relationships that matter, and serves unified context to your AI applications without requiring you to centralize or migrate data first. The result is AI that understands your business language and reasons across your entire data landscape.

When a conversational agent queries this layer, answers are grounded in actual business context rather than pattern-matched text fragments.

Consider what this looks like in practice. A portfolio manager asks: "What's our exposure to the healthcare sector, and are there any compliance concerns I should know about?"

Traditional enterprise search returns documents matching "healthcare," "exposure," and "compliance." The user gets a results page with position reports, regulatory filings, and internal memos.

A chatbot layered on basic search might generate a plausible-sounding answer by summarizing retrieved fragments. But without understanding how these documents relate to each other, the response could be incomplete, outdated, or simply wrong.

A conversational agent built on Knowledge Fabric synthesizes position data from the trading system, risk metrics from the compliance platform, and recent regulatory updates from the legal repository. It understands these are related, respects permission boundaries, and delivers a coherent answer with citations to each source. The user can verify any claim by clicking through to the underlying data.

Employees aren't waiting for IT to catch up. When enterprise systems force them into keyword-search-and-sift workflows, they find workarounds. Often by using consumer AI tools that don't respect enterprise data governance. Which means shadow AI proliferates not because employees are careless, but because official systems don't meet their basic expectations for knowledge access.

The question isn't whether to adopt conversational agents for enterprise knowledge access. It's whether to do it with the right architecture or watch uncontrolled alternatives fill the gap.

Your employees already know how to ask questions. The question is whether your enterprise can answer them.

Unframe's enterprise search solutions deliver conversational knowledge access built on a Knowledge Fabric foundation. Grounded answers, not hallucinations. See how it works.